Mirror of https://github.com/Mintplex-Labs/anything-llm.git (synced 2025-04-17 18:18:11 +00:00)

Commit 27c58541bd: "inital commit ⚡"

100 changed files with 5394 additions and 0 deletions

```
.gitignore
LICENSE
README.md
clean.sh
collector/
    .env.example
    .gitignore
    README.md
    hotdir/
    main.py
    requirements.txt
    scripts/
        __init__.py
        gitbook.py
        link.py
        link_utils.py
        medium.py
        medium_utils.py
        substack.py
        substack_utils.py
        utils.py
        watch.py
        watch/
        youtube.py
        yt_utils.py
frontend/
    .env.production
    .eslintrc.cjs
    .gitignore
    .nvmrc
    index.html
    jsconfig.json
    package.json
    postcss.config.js
    public/
    src/
        App.jsx
        AuthContext.jsx
        index.css
        main.jsx
        components/
            DefaultChat/
            Modals/
            Sidebar/
            UserIcon/
            WorkspaceChat/
        models/
        pages/
        utils/
    tailwind.config.js
    vite.config.js
images/
package.json
server/
    .env.example
    .gitignore
    .nvmrc
    documents/
    endpoints/
    index.js
    models/
    package.json
    utils/
        chats/
        files/
        http/
        middleware/
        openAi/
        pinecone/
    vector-cache/
```

.gitignore (vendored, Normal file, +10 lines)

```diff
@@ -0,0 +1,10 @@
+v-env
+.env
+!.env.example
+
+node_modules
+__pycache__
+v-env
+*.lock
+.DS_Store
+
```

LICENSE (Normal file, +21 lines)

```diff
@@ -0,0 +1,21 @@
+The MIT License
+
+Copyright (c) Mintplex Labs Inc.
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in
+all copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN
+THE SOFTWARE.
```

README.md (Normal file, +59 lines)

```diff
@@ -0,0 +1,59 @@
+# 🤖 AnythingLLM: A full-stack personalized AI assistant
+
+[](https://twitter.com/tcarambat) [](https://discord.gg/6UyHPeGZAC)
+
+A full-stack application and tool suite that lets you turn any document, resource, or piece of content into data that any LLM can use as a reference during chatting. This application runs with minimal overhead because, by default, the LLM and vector database are hosted remotely; both can be swapped for local instances. Currently this project supports Pinecone and OpenAI.
+
+
+[view more screenshots](/images/screenshots/SCREENSHOTS.md)
+
+### Watch the demo!
+
+_tbd_
+
+### Product Overview
+AnythingLLM aims to be a full-stack application where you can use commercial off-the-shelf LLMs with long-term-memory solutions, or use popular open-source LLM and vector database solutions.
+
+AnythingLLM is a full-stack product that you can run locally or host remotely, letting you chat intelligently with any documents you provide.
+
+AnythingLLM divides your documents into objects called `workspaces`. A workspace functions much like a thread, but it also containerizes your documents. Workspaces can share documents, but they do not talk to each other, so the context for each workspace stays clean.
+
+Some cool features of AnythingLLM:
+- Atomically manage the documents used in long-term memory from a simple UI
+- Two chat modes: `conversation` and `query`. Conversation retains previous questions and amendments; query is simple QA against your documents
+- Each chat response contains a citation linked to the original content
+- Simple technology stack for fast iteration
+- Fully capable of being hosted remotely
+- "Bring your own LLM" model and vector solution. _still in progress_
+- Extremely efficient cost-saving measures for managing very large documents: you'll never pay to embed a massive document or transcript more than once. 90% more cost-effective than other LTM (long-term memory) chatbots
+
+### Technical Overview
+This monorepo consists of three main sections:
+- `collector`: Python tools that let you quickly convert online resources or local documents into an LLM-usable format.
+- `frontend`: A Vite + React frontend for easily creating and managing all the content the LLM can use.
+- `server`: A Node.js + Express server that handles all interactions, including vector database management and LLM calls.
+
+### Requirements
+- `yarn` and `node` on your machine
+- `python` 3.8+ for running scripts in `collector/`.
+- access to an LLM like `GPT-3.5`, `GPT-4`*.
+- a [Pinecone.io](https://pinecone.io) free account*.
+*You can use drop-in replacements for these; they are just the easiest way to get up and running fast.
+
+### How to get started
+- Run `yarn setup` from the project root directory.
+
+This sets up the `.env` files you'll need in each application section. Fill those out before proceeding, or things won't work correctly.
+
+Next, you will need some content to embed. This could be a YouTube channel, Medium articles, local text files, Word documents, and more. This is where you will use the `collector/` part of the repo.
+
+[Go set up and run collector scripts](./collector/README.md)
+
+[Learn about documents](./server/documents/DOCUMENTS.md)
+
+[Learn about vector caching](./server/documents/VECTOR_CACHE.md)
+
+### Contributing
+- Create an issue
+- Create a PR with a branch name in the format `<issue number>-<short name>`
+- Yee haw, let's merge
```
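The README's promise that you never pay to embed the same document twice depends on caching embeddings. The server's actual mechanism is described in the linked `VECTOR_CACHE.md`, which is not part of this diff section. Below is a minimal Python sketch of the general idea only. It assumes a hypothetical `embed` callable standing in for a paid embedding API, and it keys each cached vector by a SHA-256 digest of the text.

```python
import hashlib
import json
from pathlib import Path
from typing import Callable, List

Vector = List[float]


def cached_embed(text: str, embed: Callable[[str], Vector], cache_dir: Path) -> Vector:
    """Return the embedding for `text`, calling `embed` only on a cache miss.

    `embed` is a hypothetical stand-in for a paid embedding API call.
    """
    cache_dir.mkdir(parents=True, exist_ok=True)
    # Identical content always maps to the same cache file.
    key = hashlib.sha256(text.encode("utf-8")).hexdigest()
    cache_file = cache_dir / f"{key}.json"
    if cache_file.exists():
        # Cache hit: reuse the stored vector instead of paying to embed again.
        return json.loads(cache_file.read_text(encoding="utf-8"))
    vector = embed(text)
    cache_file.write_text(json.dumps(vector), encoding="utf-8")
    return vector
```

Calling `cached_embed` twice with the same transcript invokes `embed` only once. A real pipeline would usually cache per chunk, because documents are normally split before they are embedded.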

clean.sh (Normal file, +2 lines)

```diff
@@ -0,0 +1,2 @@
+# Easily kill process on port because sometimes nodemon fails to reboot
+kill -9 $(lsof -t -i tcp:5000)
```

collector/.env.example (Normal file, +1 line)

```diff
@@ -0,0 +1 @@
+GOOGLE_APIS_KEY=
```

collector/.gitignore (vendored, Normal file, +6 lines)

```diff
@@ -0,0 +1,6 @@
+outputs/*/*.json
+hotdir/*
+hotdir/processed/*
+!hotdir/__HOTDIR__.md
+!hotdir/processed
+
```

45

collector/README.md

Normal file

45

collector/README.md

Normal file

|

|

@ -0,0 +1,45 @@

|

|||

# How to collect data for vectorizing

|

||||

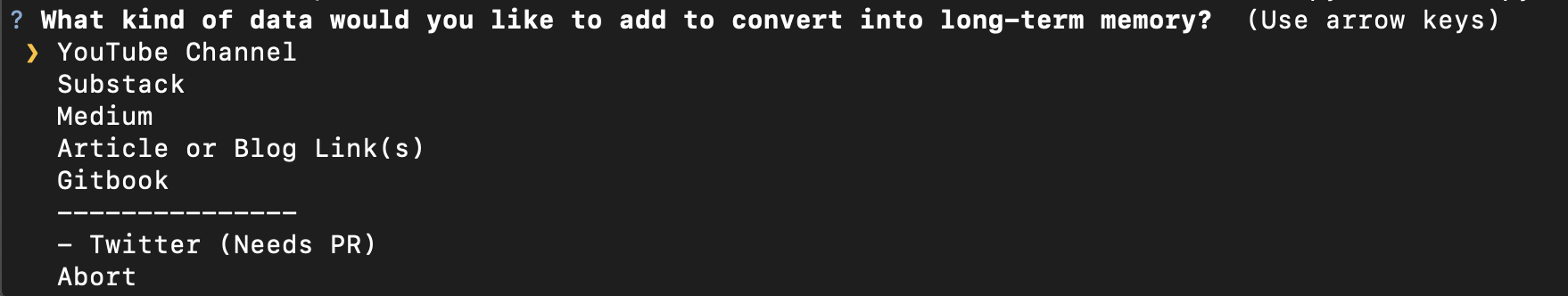

This process should be run first. This will enable you to collect a ton of data across various sources. Currently the following services are supported:

|

||||

- [x] YouTube Channels

|

||||

- [x] Medium

|

||||

- [x] Substack

|

||||

- [x] Arbitrary Link

|

||||

- [x] Gitbook

|

||||

- [x] Local Files (.txt, .pdf, etc) [See full list](./hotdir/__HOTDIR__.md)

|

||||

_these resources are under development or require PR_

|

||||

- Twitter

|

||||

|

||||

|

||||

### Requirements

|

||||

- [ ] Python 3.8+

|

||||

- [ ] Google Cloud Account (for YouTube channels)

|

||||

- [ ] `brew install pandoc` [pandoc](https://pandoc.org/installing.html) (for .ODT document processing)

|

||||

|

||||

### Setup

|

||||

This example will be using python3.9, but will work with 3.8+. Tested on MacOs. Untested on Windows

|

||||

- install virtualenv for python3.8+ first before any other steps. `python3.9 -m pip install virutalenv`

|

||||

- `cd collector` from root directory

|

||||

- `python3.9 -m virtualenv v-env`

|

||||

- `source v-env/bin/activate`

|

||||

- `pip install -r requirements.txt`

|

||||

- `cp .env.example .env`

|

||||

- `python main.py` for interactive collection or `python watch.py` to process local documents.

|

||||

- Select the option you want and follow follow the prompts - Done!

|

||||

- run `deactivate` to get back to regular shell

|

||||

|

||||

### Outputs

|

||||

All JSON file data is cached in the `output/` folder. This is to prevent redundant API calls to services which may have rate limits to quota caps. Clearing out the `output/` folder will execute the script as if there was no cache.

|

||||

|

||||

As files are processed you will see data being written to both the `collector/outputs` folder as well as the `server/documents` folder. Later in this process, once you boot up the server you will then bulk vectorize this content from a simple UI!

|

||||

|

||||

If collection fails at any point in the process it will pick up where it last bailed out so you are not reusing credits.

|

||||

|

||||

### How to get a Google Cloud API Key (YouTube data collection only)

|

||||

**required to fetch YouTube transcripts and data**

|

||||

- Have a google account

|

||||

- [Visit the GCP Cloud Console](https://console.cloud.google.com/welcome)

|

||||

- Click on dropdown in top right > Create new project. Name it whatever you like

|

||||

-

|

||||

- [Enable YouTube Data APIV3](https://console.cloud.google.com/apis/library/youtube.googleapis.com)

|

||||

- Once enabled generate a Credential key for this API

|

||||

- Paste your key after `GOOGLE_APIS_KEY=` in your `collector/.env` file.

|

||||
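The "pick up where it last bailed out" behavior comes from skipping items whose output file already exists. `medium.py` does exactly this with `if os.path.exists(pub_file_path) == True: continue`. Below is a minimal sketch of that skip-if-exists pattern. The helpers `write_once` and `collect` and the `fetch` callable are hypothetical names, not part of the collector. One design choice here is an assumption added on top of the original pattern: each file is written to a temporary file and then renamed with `os.replace`, so an interrupted run cannot leave a truncated JSON file that a later run would mistake for finished work.

```python
import json
import os
import tempfile
from pathlib import Path
from typing import Any, Callable, Dict, Iterable


def write_once(path: Path, build: Callable[[], Dict[str, Any]]) -> bool:
    """Write build() as JSON to `path` unless the file already exists.

    Returns True if a new file was written, False if it was skipped.
    """
    if path.exists():
        # Already collected on a previous run: skip the (possibly paid) fetch.
        return False
    path.parent.mkdir(parents=True, exist_ok=True)
    data = build()
    # Write to a temp file in the same directory, then atomically rename it,
    # so an interrupted run never leaves a half-written JSON file behind.
    fd, tmp_name = tempfile.mkstemp(dir=path.parent, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as tmp:
            json.dump(data, tmp, ensure_ascii=True, indent=4)
        os.replace(tmp_name, path)
    except BaseException:
        os.unlink(tmp_name)
        raise
    return True


def collect(items: Iterable[str], out_dir: Path, fetch: Callable[[str], Dict[str, Any]]) -> int:
    """Collect each item into out_dir/<item>.json; return how many were newly written."""
    return sum(write_once(out_dir / f"{item}.json", lambda i=item: fetch(i)) for item in items)
```

Rerunning `collect` after a crash fetches only the items that have no output file yet.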

collector/hotdir/__HOTDIR__.md (Normal file, +17 lines)

```diff
@@ -0,0 +1,17 @@
+### What is the "hot directory"?
+
+This is the location where you can dump all supported file types and have them automatically converted, prepared for the vectorizing service, and made selectable from the AnythingLLM frontend.
+
+Files dropped in here will only be processed when you are running `python watch.py` from the `collector` directory.
+
+Once a file is converted, the original is moved to the `hotdir/processed` folder so that it can still be linked to when it is cited as a source document during chatting.
+
+**Supported file types**
+- `.md`
+- `.text`
+- `.pdf`
+
+__requires more development__
+- `.png .jpg etc`
+- `.mp3`
+- `.mp4`
```
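`watch.py` is not shown in this section. Below is a minimal sketch of one processing pass over the hot directory as described above: convert each supported file, then move the original into `hotdir/processed` so it can still be cited. The `converters` mapping and the `sink` callback are hypothetical. They stand in for the real per-format converters and for writing the JSON document the server reads. Skipping `__HOTDIR__.md` is an assumption, based on `collector/.gitignore` keeping that file in place.

```python
import shutil
from pathlib import Path
from typing import Callable, Dict, List

Converter = Callable[[Path], str]


def process_hotdir(hotdir: Path, converters: Dict[str, Converter],
                   sink: Callable[[str, str], None]) -> List[str]:
    """Run one pass over `hotdir`, converting every supported file.

    converters: hypothetical mapping of lowercase extension (e.g. ".md") to a
    function returning the file's extracted text.
    sink: hypothetical callback receiving (file name, text).
    """
    processed_dir = hotdir / "processed"
    processed_dir.mkdir(parents=True, exist_ok=True)
    done: List[str] = []
    for path in sorted(hotdir.iterdir()):
        if not path.is_file() or path.name == "__HOTDIR__.md":
            continue  # skip sub-folders and the folder's own README
        convert = converters.get(path.suffix.lower())
        if convert is None:
            continue  # unsupported type: leave it where it is
        sink(path.name, convert(path))
        # Keep the original so it can still be linked to as a cited source.
        shutil.move(str(path), str(processed_dir / path.name))
        done.append(path.name)
    return done
```

A watcher could call `process_hotdir` in a loop with `time.sleep` between passes. Because processed files are moved out, repeated passes do not reconvert them.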

collector/main.py (Normal file, +81 lines)

```diff
@@ -0,0 +1,81 @@
+import os
+from whaaaaat import prompt, Separator
+from scripts.youtube import youtube
+from scripts.link import link, links
+from scripts.substack import substack
+from scripts.medium import medium
+from scripts.gitbook import gitbook
+
+def main():
+  if os.name == 'nt':
+    methods = {
+      '1': 'YouTube Channel',
+      '2': 'Article or Blog Link',
+      '3': 'Substack',
+      '4': 'Medium',
+      '5': 'Gitbook'
+    }
+    print("There are options for data collection to make this easier for you.\nType the number of the method you wish to execute.")
+    print("1. YouTube Channel\n2. Article or Blog Link (Single)\n3. Substack\n4. Medium\n\n[In development]:\nTwitter\n\n")
+    selection = input("Your selection: ")
+    method = methods.get(str(selection))
+  else:
+    questions = [
+      {
+        "type": "list",
+        "name": "collector",
+        "message": "What kind of data would you like to add to convert into long-term memory?",
+        "choices": [
+          "YouTube Channel",
+          "Substack",
+          "Medium",
+          "Article or Blog Link(s)",
+          "Gitbook",
+          Separator(),
+          {"name": "Twitter", "disabled": "Needs PR"},
+          "Abort",
+        ],
+      },
+    ]
+    method = prompt(questions).get('collector')
+
+  if('Article or Blog Link' in method):
+    questions = [
+      {
+        "type": "list",
+        "name": "collector",
+        "message": "Do you want to scrape a single article/blog/url or many at once?",
+        "choices": [
+          'Single URL',
+          'Multiple URLs',
+          'Abort',
+        ],
+      },
+    ]
+    method = prompt(questions).get('collector')
+    if(method == 'Single URL'):
+      link()
+      exit(0)
+    if(method == 'Multiple URLs'):
+      links()
+      exit(0)
+
+  if(method == 'Abort'): exit(0)
+  if(method == 'YouTube Channel'):
+    youtube()
+    exit(0)
+  if(method == 'Substack'):
+    substack()
+    exit(0)
+  if(method == 'Medium'):
+    medium()
+    exit(0)
+  if(method == 'Gitbook'):
+    gitbook()
+    exit(0)
+
+  print("Selection was not valid.")
+  exit(1)
+
+if __name__ == "__main__":
+  main()
```
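In the Windows branch of `main()`, `methods.get(str(selection))` returns `None` for an unrecognized number. The following `'Article or Blog Link' in method` check then raises `TypeError` before the "Selection was not valid." message can be reached. Note also that the printed Windows menu omits option 5 (Gitbook), even though the dict contains it. Below is a minimal sketch of a table-driven alternative that validates the selection before dispatching. `resolve_choice` and `dispatch` are hypothetical helpers, not part of the collector.

```python
from typing import Callable, Dict, Optional


def resolve_choice(selection: str, menu: Dict[str, str]) -> Optional[str]:
    """Map a typed menu number (e.g. "2") to its label, or None if it is unknown."""
    return menu.get(selection.strip())


def dispatch(method: Optional[str], handlers: Dict[str, Callable[[], None]]) -> int:
    """Run the handler registered for `method` and return a process exit code."""
    if method is None or method not in handlers:
        # None means the typed number was not in the menu.
        print("Selection was not valid.")
        return 1
    handlers[method]()
    return 0
```

A caller might build `handlers` from the real collector functions, for example `{"YouTube Channel": youtube, "Gitbook": gitbook, "Abort": lambda: None}`, and pass the return value of `dispatch` to `exit(...)`. Keeping one dict of labels means the printed menu and the accepted choices cannot drift apart.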

collector/requirements.txt (Normal file, +221 lines)

```diff
@@ -0,0 +1,221 @@
+about-time==4.2.1
+aiohttp==3.8.4
+aiosignal==1.3.1
+alive-progress==3.1.2
+anyio==3.7.0
+appdirs==1.4.4
+argilla==1.8.0
+async-timeout==4.0.2
+attrs==23.1.0
+backoff==2.2.1
+beautifulsoup4==4.12.2
+bs4==0.0.1
+certifi==2023.5.7
+cffi==1.15.1
+chardet==5.1.0
+charset-normalizer==3.1.0
+click==8.1.3
+commonmark==0.9.1
+cryptography==41.0.1
+cssselect==1.2.0
+dataclasses-json==0.5.7
+Deprecated==1.2.14
+et-xmlfile==1.1.0
+exceptiongroup==1.1.1
+fake-useragent==1.1.3
+frozenlist==1.3.3
+grapheme==0.6.0
+greenlet==2.0.2
+h11==0.14.0
+httpcore==0.16.3
+httpx==0.23.3
+idna==3.4
+importlib-metadata==6.6.0
+importlib-resources==5.12.0
+install==1.3.5
+joblib==1.2.0
+langchain==0.0.189
+lxml==4.9.2
+Markdown==3.4.3
+marshmallow==3.19.0
+marshmallow-enum==1.5.1
+monotonic==1.6
+msg-parser==1.2.0
+multidict==6.0.4
+mypy-extensions==1.0.0
+nltk==3.8.1
+numexpr==2.8.4
+numpy==1.23.5
+olefile==0.46
+openapi-schema-pydantic==1.2.4
+openpyxl==3.1.2
+packaging==23.1
+pandas==1.5.3
+parse==1.19.0
+pdfminer.six==20221105
+Pillow==9.5.0
+prompt-toolkit==1.0.14
+pycparser==2.21
+pydantic==1.10.8
+pyee==8.2.2
+Pygments==2.15.1
+pyobjc==9.1.1
+pyobjc-core==9.1.1
+pyobjc-framework-Accounts==9.1.1
+pyobjc-framework-AddressBook==9.1.1
+pyobjc-framework-AdSupport==9.1.1
+pyobjc-framework-AppleScriptKit==9.1.1
+pyobjc-framework-AppleScriptObjC==9.1.1
+pyobjc-framework-ApplicationServices==9.1.1
+pyobjc-framework-AudioVideoBridging==9.1.1
+pyobjc-framework-AuthenticationServices==9.1.1
+pyobjc-framework-AutomaticAssessmentConfiguration==9.1.1
+pyobjc-framework-Automator==9.1.1
+pyobjc-framework-AVFoundation==9.1.1
+pyobjc-framework-AVKit==9.1.1
+pyobjc-framework-BusinessChat==9.1.1
+pyobjc-framework-CalendarStore==9.1.1
+pyobjc-framework-CFNetwork==9.1.1
+pyobjc-framework-CloudKit==9.1.1
+pyobjc-framework-Cocoa==9.1.1
+pyobjc-framework-Collaboration==9.1.1
+pyobjc-framework-ColorSync==9.1.1
+pyobjc-framework-Contacts==9.1.1
+pyobjc-framework-ContactsUI==9.1.1
+pyobjc-framework-CoreAudio==9.1.1
+pyobjc-framework-CoreAudioKit==9.1.1
+pyobjc-framework-CoreBluetooth==9.1.1
+pyobjc-framework-CoreData==9.1.1
+pyobjc-framework-CoreHaptics==9.1.1
+pyobjc-framework-CoreLocation==9.1.1
+pyobjc-framework-CoreMedia==9.1.1
+pyobjc-framework-CoreMediaIO==9.1.1
+pyobjc-framework-CoreMIDI==9.1.1
+pyobjc-framework-CoreML==9.1.1
+pyobjc-framework-CoreMotion==9.1.1
+pyobjc-framework-CoreServices==9.1.1
+pyobjc-framework-CoreSpotlight==9.1.1
+pyobjc-framework-CoreText==9.1.1
+pyobjc-framework-CoreWLAN==9.1.1
+pyobjc-framework-CryptoTokenKit==9.1.1
+pyobjc-framework-DeviceCheck==9.1.1
+pyobjc-framework-DictionaryServices==9.1.1
+pyobjc-framework-DiscRecording==9.1.1
+pyobjc-framework-DiscRecordingUI==9.1.1
+pyobjc-framework-DiskArbitration==9.1.1
+pyobjc-framework-DVDPlayback==9.1.1
+pyobjc-framework-EventKit==9.1.1
+pyobjc-framework-ExceptionHandling==9.1.1
+pyobjc-framework-ExecutionPolicy==9.1.1
+pyobjc-framework-ExternalAccessory==9.1.1
+pyobjc-framework-FileProvider==9.1.1
+pyobjc-framework-FileProviderUI==9.1.1
+pyobjc-framework-FinderSync==9.1.1
+pyobjc-framework-FSEvents==9.1.1
+pyobjc-framework-GameCenter==9.1.1
+pyobjc-framework-GameController==9.1.1
+pyobjc-framework-GameKit==9.1.1
+pyobjc-framework-GameplayKit==9.1.1
+pyobjc-framework-ImageCaptureCore==9.1.1
+pyobjc-framework-IMServicePlugIn==9.1.1
+pyobjc-framework-InputMethodKit==9.1.1
+pyobjc-framework-InstallerPlugins==9.1.1
+pyobjc-framework-InstantMessage==9.1.1
+pyobjc-framework-Intents==9.1.1
+pyobjc-framework-IOBluetooth==9.1.1
+pyobjc-framework-IOBluetoothUI==9.1.1
+pyobjc-framework-IOSurface==9.1.1
+pyobjc-framework-iTunesLibrary==9.1.1
+pyobjc-framework-LatentSemanticMapping==9.1.1
+pyobjc-framework-LaunchServices==9.1.1
+pyobjc-framework-libdispatch==9.1.1
+pyobjc-framework-libxpc==9.1.1
+pyobjc-framework-LinkPresentation==9.1.1
+pyobjc-framework-LocalAuthentication==9.1.1
+pyobjc-framework-MapKit==9.1.1
+pyobjc-framework-MediaAccessibility==9.1.1
+pyobjc-framework-MediaLibrary==9.1.1
+pyobjc-framework-MediaPlayer==9.1.1
+pyobjc-framework-MediaToolbox==9.1.1
+pyobjc-framework-Metal==9.1.1
+pyobjc-framework-MetalKit==9.1.1
+pyobjc-framework-MetalPerformanceShaders==9.1.1
+pyobjc-framework-ModelIO==9.1.1
+pyobjc-framework-MultipeerConnectivity==9.1.1
+pyobjc-framework-NaturalLanguage==9.1.1
+pyobjc-framework-NetFS==9.1.1
+pyobjc-framework-Network==9.1.1
+pyobjc-framework-NetworkExtension==9.1.1
+pyobjc-framework-NotificationCenter==9.1.1
+pyobjc-framework-OpenDirectory==9.1.1
+pyobjc-framework-OSAKit==9.1.1
+pyobjc-framework-OSLog==9.1.1
+pyobjc-framework-PencilKit==9.1.1
+pyobjc-framework-Photos==9.1.1
+pyobjc-framework-PhotosUI==9.1.1
+pyobjc-framework-PreferencePanes==9.1.1
+pyobjc-framework-PushKit==9.1.1
+pyobjc-framework-Quartz==9.1.1
+pyobjc-framework-QuickLookThumbnailing==9.1.1
+pyobjc-framework-SafariServices==9.1.1
+pyobjc-framework-SceneKit==9.1.1
+pyobjc-framework-ScreenSaver==9.1.1
+pyobjc-framework-ScriptingBridge==9.1.1
+pyobjc-framework-SearchKit==9.1.1
+pyobjc-framework-Security==9.1.1
+pyobjc-framework-SecurityFoundation==9.1.1
+pyobjc-framework-SecurityInterface==9.1.1
+pyobjc-framework-ServiceManagement==9.1.1
+pyobjc-framework-Social==9.1.1
+pyobjc-framework-SoundAnalysis==9.1.1
+pyobjc-framework-Speech==9.1.1
+pyobjc-framework-SpriteKit==9.1.1
+pyobjc-framework-StoreKit==9.1.1
+pyobjc-framework-SyncServices==9.1.1
+pyobjc-framework-SystemConfiguration==9.1.1
+pyobjc-framework-SystemExtensions==9.1.1
+pyobjc-framework-UserNotifications==9.1.1
+pyobjc-framework-VideoSubscriberAccount==9.1.1
+pyobjc-framework-VideoToolbox==9.1.1
+pyobjc-framework-Vision==9.1.1
+pyobjc-framework-WebKit==9.1.1
+pypandoc==1.11
+pyppeteer==1.0.2
+pyquery==2.0.0
+python-dateutil==2.8.2
+python-docx==0.8.11
+python-dotenv==0.21.1
+python-magic==0.4.27
+python-pptx==0.6.21
+python-slugify==8.0.1
+pytz==2023.3
+PyYAML==6.0
+regex==2023.5.5
+requests==2.31.0
+requests-html==0.10.0
+rfc3986==1.5.0
+rich==13.0.1
+six==1.16.0
+sniffio==1.3.0
+soupsieve==2.4.1
+SQLAlchemy==2.0.15
+tenacity==8.2.2
+text-unidecode==1.3
+tiktoken==0.4.0
+tqdm==4.65.0
+typer==0.9.0
+typing-inspect==0.9.0
+typing_extensions==4.6.3
+unstructured==0.7.1
+urllib3==1.26.16
+uuid==1.30
+w3lib==2.1.1
+wcwidth==0.2.6
+websockets==10.4
+whaaaaat==0.5.2
+wrapt==1.14.1
+xlrd==2.0.1
+XlsxWriter==3.1.2
+yarl==1.9.2
+youtube-transcript-api==0.6.0
+zipp==3.15.0
```

collector/scripts/__init__.py (Normal file, empty, 0 lines)

collector/scripts/gitbook.py (Normal file, +44 lines)

```diff
@@ -0,0 +1,44 @@
+import os, json
+from langchain.document_loaders import GitbookLoader
+from urllib.parse import urlparse
+from datetime import datetime
+from alive_progress import alive_it
+from .utils import tokenize
+from uuid import uuid4
+
+def gitbook():
+  url = input("Enter the URL of the GitBook you want to collect: ")
+  if(url == ''):
+    print("Not a gitbook URL")
+    exit(1)
+
+  primary_source = urlparse(url)
+  output_path = f"./outputs/gitbook-logs/{primary_source.netloc}"
+  transaction_output_dir = f"../server/documents/gitbook-{primary_source.netloc}"
+
+  if os.path.exists(output_path) == False:os.makedirs(output_path)
+  if os.path.exists(transaction_output_dir) == False: os.makedirs(transaction_output_dir)
+  loader = GitbookLoader(url, load_all_paths= primary_source.path in ['','/'])
+  for doc in alive_it(loader.load()):
+    metadata = doc.metadata
+    content = doc.page_content
+    source = urlparse(metadata.get('source'))
+    name = 'home' if source.path in ['','/'] else source.path.replace('/','_')
+    output_filename = f"doc-{name}.json"
+    transaction_output_filename = f"doc-{name}.json"
+    data = {
+      'id': str(uuid4()),
+      'url': metadata.get('source'),
+      "title": metadata.get('title'),
+      "description": metadata.get('title'),
+      "published": datetime.today().strftime('%Y-%m-%d %H:%M:%S'),
+      "wordCount": len(content),
+      'pageContent': content,
+      'token_count_estimate': len(tokenize(content))
+    }
+
+    with open(f"{output_path}/{output_filename}", 'w', encoding='utf-8') as file:
+      json.dump(data, file, ensure_ascii=True, indent=4)
+
+    with open(f"{transaction_output_dir}/{transaction_output_filename}", 'w', encoding='utf-8') as file:
+      json.dump(data, file, ensure_ascii=True, indent=4)
```
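`gitbook.py` names each output file after the page's URL path: `home` for the root, otherwise with slashes replaced by underscores. It sets `wordCount` to `len(content)`, which counts characters, whereas the other collectors count words with `len(text.split(' '))`. Below is a minimal sketch of the record-building step as pure functions, with a whitespace-based word count. `page_name` and `build_record` are hypothetical names. `token_count_estimate` is left out because `tokenize` lives in `collector/scripts/utils.py`, which is not shown here.

```python
from datetime import datetime
from typing import Any, Dict, Optional
from urllib.parse import urlparse
from uuid import uuid4


def page_name(source_url: str) -> str:
    """Mirror gitbook.py's naming: 'home' for the root, else '/' becomes '_'."""
    path = urlparse(source_url).path
    return "home" if path in ("", "/") else path.replace("/", "_")


def build_record(source_url: str, title: Optional[str], content: str) -> Dict[str, Any]:
    """Build a document record shaped like the one gitbook.py writes."""
    return {
        "id": str(uuid4()),
        "url": source_url,
        "title": title,
        "description": title,
        "published": datetime.today().strftime("%Y-%m-%d %H:%M:%S"),
        # Count whitespace-separated words, not characters.
        "wordCount": len(content.split()),
        "pageContent": content,
    }
```

With these helpers, the output filename for a page would be `f"doc-{page_name(url)}.json"`, matching the committed naming scheme.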

collector/scripts/link.py (Normal file, +139 lines)

```diff
@@ -0,0 +1,139 @@
+import os, json, tempfile
+from urllib.parse import urlparse
+from requests_html import HTMLSession
+from langchain.document_loaders import UnstructuredHTMLLoader
+from .link_utils import append_meta
+from .utils import tokenize, ada_v2_cost
+
+# Example Channel URL https://tim.blog/2022/08/09/nft-insider-trading-policy/
+def link():
+  print("[NOTICE]: The first time running this process it will download supporting libraries.\n\n")
+  fqdn_link = input("Paste in the URL of an online article or blog: ")
+  if(len(fqdn_link) == 0):
+    print("Invalid URL!")
+    exit(1)
+
+  session = HTMLSession()
+  req = session.get(fqdn_link)
+  if(req.ok == False):
+    print("Could not reach this url!")
+    exit(1)
+
+  req.html.render()
+  full_text = None
+  with tempfile.NamedTemporaryFile(mode = "w") as tmp:
+    tmp.write(req.html.html)
+    tmp.seek(0)
+    loader = UnstructuredHTMLLoader(tmp.name)
+    data = loader.load()[0]
+    full_text = data.page_content
+    tmp.close()
+
+  link = append_meta(req, full_text, True)
+  if(len(full_text) > 0):
+    source = urlparse(req.url)
+    output_filename = f"website-{source.netloc}-{source.path.replace('/','_')}.json"
+    output_path = f"./outputs/website-logs"
+
+    transaction_output_filename = f"article-{source.path.replace('/','_')}.json"
+    transaction_output_dir = f"../server/documents/website-{source.netloc}"
+
+    if os.path.isdir(output_path) == False:
+      os.makedirs(output_path)
+
+    if os.path.isdir(transaction_output_dir) == False:
+      os.makedirs(transaction_output_dir)
+
+    full_text = append_meta(req, full_text)
+    tokenCount = len(tokenize(full_text))
+    link['pageContent'] = full_text
+    link['token_count_estimate'] = tokenCount
+
+    with open(f"{output_path}/{output_filename}", 'w', encoding='utf-8') as file:
+      json.dump(link, file, ensure_ascii=True, indent=4)
+
+    with open(f"{transaction_output_dir}/{transaction_output_filename}", 'w', encoding='utf-8') as file:
+      json.dump(link, file, ensure_ascii=True, indent=4)
+  else:
+    print("Could not parse any meaningful data from this link or url.")
+    exit(1)
+
+  print(f"\n\n[Success]: article or link content fetched!")
+  print(f"////////////////////////////")
+  print(f"Your estimated cost to embed this data using OpenAI's text-embedding-ada-002 model at $0.0004 / 1K tokens will cost {ada_v2_cost(tokenCount)} using {tokenCount} tokens.")
+  print(f"////////////////////////////")
+  exit(0)
+
+def links():
+  links = []
+  prompt = "Paste in the URL of an online article or blog: "
+  done = False
+
+  while(done == False):
+    new_link = input(prompt)
+    if(len(new_link) == 0):
+      done = True
+      links = [*set(links)]
+      continue
+
+    links.append(new_link)
+    prompt = f"\n{len(links)} links in queue. Submit an empty value when done pasting in links to execute collection.\nPaste in the next URL of an online article or blog: "
+
+  if(len(links) == 0):
+    print("No valid links provided!")
+    exit(1)
+
+  totalTokens = 0
+  for link in links:
+    print(f"Working on {link}...")
+    session = HTMLSession()
+    req = session.get(link)
+    if(req.ok == False):
+      print(f"Could not reach {link} - skipping!")
+      continue
+
+    req.html.render()
+    full_text = None
+    with tempfile.NamedTemporaryFile(mode = "w") as tmp:
+      tmp.write(req.html.html)
+      tmp.seek(0)
+      loader = UnstructuredHTMLLoader(tmp.name)
+      data = loader.load()[0]
+      full_text = data.page_content
+      tmp.close()
+
+    link = append_meta(req, full_text, True)
+    if(len(full_text) > 0):
+      source = urlparse(req.url)
+      output_filename = f"website-{source.netloc}-{source.path.replace('/','_')}.json"
+      output_path = f"./outputs/website-logs"
+
+      transaction_output_filename = f"article-{source.path.replace('/','_')}.json"
+      transaction_output_dir = f"../server/documents/website-{source.netloc}"
+
+      if os.path.isdir(output_path) == False:
+        os.makedirs(output_path)
+
+      if os.path.isdir(transaction_output_dir) == False:
+        os.makedirs(transaction_output_dir)
+
+      full_text = append_meta(req, full_text)
+      tokenCount = len(tokenize(full_text))
+      link['pageContent'] = full_text
+      link['token_count_estimate'] = tokenCount
+      totalTokens += tokenCount
+
+      with open(f"{output_path}/{output_filename}", 'w', encoding='utf-8') as file:
+        json.dump(link, file, ensure_ascii=True, indent=4)
+
+      with open(f"{transaction_output_dir}/{transaction_output_filename}", 'w', encoding='utf-8') as file:
+        json.dump(link, file, ensure_ascii=True, indent=4)
+    else:
+      print(f"Could not parse any meaningful data from {link}.")
+      continue
+
+  print(f"\n\n[Success]: {len(links)} article or link contents fetched!")
+  print(f"////////////////////////////")
+  print(f"Your estimated cost to embed this data using OpenAI's text-embedding-ada-002 model at $0.0004 / 1K tokens will cost {ada_v2_cost(totalTokens)} using {totalTokens} tokens.")
+  print(f"////////////////////////////")
+  exit(0)
```
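Both `link()` and `links()` derive two filenames from the page URL and print a cost estimate at the quoted rate of $0.0004 per 1K tokens. `links()` deduplicates with `[*set(links)]`, which discards the order in which URLs were pasted. Inside its loop, the name `link` is also rebound to the metadata dict, so the "Could not parse" message prints that dict instead of the URL. Below is a minimal sketch of these helpers as standalone functions. `estimate_cost` is a hypothetical reimplementation based only on the printed rate; the real `ada_v2_cost` is in `utils.py`, which is not shown in this section.

```python
from typing import List, Tuple
from urllib.parse import urlparse

# Rate quoted in link.py's message for text-embedding-ada-002 (USD per 1K tokens).
ADA_V2_USD_PER_1K = 0.0004


def output_filenames(url: str) -> Tuple[str, str]:
    """Return (log filename, server document filename) as link.py builds them."""
    source = urlparse(url)
    slug = source.path.replace("/", "_")
    return f"website-{source.netloc}-{slug}.json", f"article-{slug}.json"


def dedupe_preserving_order(urls: List[str]) -> List[str]:
    """Drop blank and repeated URLs but keep the order in which they were pasted."""
    # dict keys are unique and keep insertion order (Python 3.7+).
    return list(dict.fromkeys(u.strip() for u in urls if u.strip()))


def estimate_cost(token_count: int) -> float:
    """Hypothetical stand-in for ada_v2_cost: tokens / 1000 * rate, in USD."""
    return token_count / 1000 * ADA_V2_USD_PER_1K
```

Using a distinct loop variable (for example `url`) for the pasted address, with `dedupe_preserving_order` in place of `[*set(links)]`, would keep the error messages pointing at the URL that failed.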

collector/scripts/link_utils.py (Normal file, +14 lines)

```diff
@@ -0,0 +1,14 @@
+import json
+from datetime import datetime
+from dotenv import load_dotenv
+load_dotenv()
+
+def append_meta(request, text, metadata_only = False):
+  meta = {
+    'url': request.url,
+    'title': request.html.find('title', first=True).text if len(request.html.find('title')) != 0 else '',
+    'description': request.html.find('meta[name="description"]', first=True).attrs.get('content') if request.html.find('meta[name="description"]', first=True) != None else '',
+    'published':request.html.find('meta[property="article:published_time"]', first=True).attrs.get('content') if request.html.find('meta[property="article:published_time"]', first=True) != None else datetime.today().strftime('%Y-%m-%d %H:%M:%S'),
+    'wordCount': len(text.split(' ')),
+  }
+  return "Article JSON Metadata:\n"+json.dumps(meta)+"\n\n\nText Content:\n" + text if metadata_only == False else meta
```
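`append_meta` reads the page `<title>`, `meta[name="description"]`, and `meta[property="article:published_time"]` through requests-html. If no publish date is present, it falls back to the current time. It then returns either the metadata dict or the text with a JSON metadata header prepended. Below is a minimal stdlib-only sketch of the same behavior using `html.parser`. It takes a raw HTML string instead of a requests-html response, which is an assumption of this sketch. `_MetaParser` and `append_meta_sketch` are hypothetical names.

```python
import json
from datetime import datetime
from html.parser import HTMLParser
from typing import Dict, Optional, Union


class _MetaParser(HTMLParser):
    """Collect the first <title> text and the first value of each named <meta>."""

    def __init__(self) -> None:
        super().__init__()
        self.title: Optional[str] = None
        self.meta: Dict[str, str] = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""
        elif tag == "meta":
            # <meta name="description"> and <meta property="article:..."> both count.
            key = attributes.get("name") or attributes.get("property")
            if key and key not in self.meta and attributes.get("content") is not None:
                self.meta[key] = attributes["content"]

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def append_meta_sketch(url: str, html: str, text: str,
                       metadata_only: bool = False) -> Union[dict, str]:
    parser = _MetaParser()
    parser.feed(html)
    meta = {
        "url": url,
        "title": (parser.title or "").strip(),
        "description": parser.meta.get("description", ""),
        "published": parser.meta.get("article:published_time",
                                     datetime.today().strftime("%Y-%m-%d %H:%M:%S")),
        # Mirrors link_utils.py, which splits on single spaces.
        "wordCount": len(text.split(" ")),
    }
    if metadata_only:
        return meta
    return "Article JSON Metadata:\n" + json.dumps(meta) + "\n\n\nText Content:\n" + text
```

Because `json.dumps` is called without `indent`, the metadata header stays on a single line, matching the committed format.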

71

collector/scripts/medium.py

Normal file

71

collector/scripts/medium.py

Normal file

|

|

@@ -0,0 +1,71 @@

import os, json
from urllib.parse import urlparse
from .utils import tokenize, ada_v2_cost
from .medium_utils import get_username, fetch_recent_publications, append_meta
from alive_progress import alive_it

# Example medium URL: https://medium.com/@yujiangtham or https://davidall.medium.com
def medium():
  print("[NOTICE]: This method will only get the 10 most recent publishings.")
  author_url = input("Enter the medium URL of the author you want to collect: ")
  if(author_url == ''):
    print("Not a valid medium.com/@author URL")
    exit(1)

  handle = get_username(author_url)
  if(handle is None):
    print("This does not appear to be a valid medium.com/@author URL")
    exit(1)

  publications = fetch_recent_publications(handle)
  if(len(publications)==0):
    print("There are no public or free publications by this creator - nothing to collect.")
    exit(1)

  totalTokenCount = 0
  transaction_output_dir = f"../server/documents/medium-{handle}"
  if os.path.isdir(transaction_output_dir) == False:
    os.makedirs(transaction_output_dir)

  for publication in alive_it(publications):
    pub_file_path = transaction_output_dir + f"/publication-{publication.get('id')}.json"
    if os.path.exists(pub_file_path) == True: continue

    full_text = publication.get('pageContent')
    if full_text is None or len(full_text) == 0: continue

    full_text = append_meta(publication, full_text)
    item = {
      'id': publication.get('id'),
      'url': publication.get('url'),
      'title': publication.get('title'),
      'published': publication.get('published'),
      'wordCount': len(full_text.split(' ')),
      'pageContent': full_text,
    }

    tokenCount = len(tokenize(full_text))
    item['token_count_estimate'] = tokenCount

    totalTokenCount += tokenCount
    with open(pub_file_path, 'w', encoding='utf-8') as file:
      json.dump(item, file, ensure_ascii=True, indent=4)

  print(f"[Success]: {len(publications)} scraped and fetched!")
  print(f"\n\n////////////////////////////")
  print(f"Your estimated cost to embed all of this data using OpenAI's text-embedding-ada-002 model at $0.0004 / 1K tokens will cost {ada_v2_cost(totalTokenCount)} using {totalTokenCount} tokens.")
  print(f"////////////////////////////\n\n")
  exit(0)

71

collector/scripts/medium_utils.py

Normal file

@@ -0,0 +1,71 @@

import os, json, requests, re
from bs4 import BeautifulSoup

def get_username(author_url):
  if '@' in author_url:
    pattern = r"medium\.com/@([\w-]+)"
    match = re.search(pattern, author_url)
    return match.group(1) if match else None
  else:
    # Given subdomain
    pattern = r"([\w-]+).medium\.com"
    match = re.search(pattern, author_url)
    return match.group(1) if match else None

def get_docid(medium_docpath):
  pattern = r"medium\.com/p/([\w-]+)"
  match = re.search(pattern, medium_docpath)
  return match.group(1) if match else None

def fetch_recent_publications(handle):
  rss_link = f"https://medium.com/feed/@{handle}"
  response = requests.get(rss_link)
  if(response.ok == False):
    print(f"Could not fetch RSS results for author.")
    return []

  xml = response.content
  soup = BeautifulSoup(xml, 'xml')
  items = soup.find_all('item')
  publications = []

  if os.path.isdir("./outputs/medium-logs") == False:
    os.makedirs("./outputs/medium-logs")

  file_path = f"./outputs/medium-logs/medium-{handle}.json"

  if os.path.exists(file_path):
    with open(file_path, "r") as file:
      print(f"Returning cached data for Author {handle}. If you do not wish to use stored data then delete the file for this author to allow refetching.")
      return json.load(file)

  for item in items:
    tags = []
    for tag in item.find_all('category'): tags.append(tag.text)
    content = BeautifulSoup(item.find('content:encoded').text, 'html.parser')
    data = {
      'id': get_docid(item.find('guid').text),
      'title': item.find('title').text,
      'url': item.find('link').text.split('?')[0],
      'tags': ','.join(tags),
      'published': item.find('pubDate').text,
      'pageContent': content.get_text()
    }
    publications.append(data)

  with open(file_path, 'w+', encoding='utf-8') as json_file:
    json.dump(publications, json_file, ensure_ascii=True, indent=2)
    print(f"{len(publications)} articles found for author medium.com/@{handle}. Saved to medium-logs/medium-{handle}.json")

  return publications

def append_meta(publication, text):
  meta = {
    'url': publication.get('url'),
    'tags': publication.get('tags'),
    'title': publication.get('title'),
    'createdAt': publication.get('published'),
    'wordCount': len(text.split(' '))
  }
  return "Article Metadata:\n"+json.dumps(meta)+"\n\nArticle Content:\n" + text
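`get_username` accepts either the `medium.com/@handle` form or the `handle.medium.com` subdomain form, and `fetch_recent_publications` then builds the RSS feed URL from the handle. A standalone sketch of that parsing follows; it escapes the dot before `medium` in the subdomain pattern (the committed pattern uses an unescaped `.`, which matches any character), and `username_from_url` / `feed_url` are hypothetical names.

```python
import re
from typing import Optional

# Profile form: https://medium.com/@yujiangtham
AT_HANDLE = re.compile(r"medium\.com/@([\w-]+)")
# Subdomain form: https://davidall.medium.com (dot escaped so it only matches a literal ".")
SUBDOMAIN = re.compile(r"([\w-]+)\.medium\.com")


def username_from_url(author_url: str) -> Optional[str]:
    # Same branching as get_username: an "@" selects the profile form.
    pattern = AT_HANDLE if "@" in author_url else SUBDOMAIN
    match = pattern.search(author_url)
    return match.group(1) if match else None


def feed_url(handle: str) -> str:
    # The RSS endpoint requested by fetch_recent_publications.
    return f"https://medium.com/feed/@{handle}"
```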

78

collector/scripts/substack.py

Normal file

@@ -0,0 +1,78 @@

import os, json
from urllib.parse import urlparse
from .utils import tokenize, ada_v2_cost
from .substack_utils import fetch_all_publications, only_valid_publications, get_content, append_meta
from alive_progress import alive_it

# Example substack URL: https://swyx.substack.com/
def substack():
  author_url = input("Enter the substack URL of the author you want to collect: ")
  if(author_url == ''):
    print("Not a valid author.substack.com URL")
    exit(1)

  source = urlparse(author_url)
  if('substack.com' not in source.netloc or len(source.netloc.split('.')) != 3):
    print("This does not appear to be a valid author.substack.com URL")
    exit(1)

  subdomain = source.netloc.split('.')[0]
  publications = fetch_all_publications(subdomain)
  valid_publications = only_valid_publications(publications)

  if(len(valid_publications)==0):
    print("There are no public or free preview newsletters by this creator - nothing to collect.")
    exit(1)

  print(f"{len(valid_publications)} of {len(publications)} publications are publicly readable text posts - collecting those.")

  totalTokenCount = 0
  transaction_output_dir = f"../server/documents/substack-{subdomain}"
  if os.path.isdir(transaction_output_dir) == False:
    os.makedirs(transaction_output_dir)

  for publication in alive_it(valid_publications):
    pub_file_path = transaction_output_dir + f"/publication-{publication.get('id')}.json"
    if os.path.exists(pub_file_path) == True: continue

    full_text = get_content(publication.get('canonical_url'))
    if full_text is None or len(full_text) == 0: continue

    full_text = append_meta(publication, full_text)
    item = {
      'id': publication.get('id'),
      'url': publication.get('canonical_url'),
      'thumbnail': publication.get('cover_image'),
      'title': publication.get('title'),
      'subtitle': publication.get('subtitle'),
      'description': publication.get('description'),
      'published': publication.get('post_date'),
      'wordCount': publication.get('wordcount'),
      'pageContent': full_text,
    }

    tokenCount = len(tokenize(full_text))
    item['token_count_estimate'] = tokenCount

    totalTokenCount += tokenCount
    with open(pub_file_path, 'w', encoding='utf-8') as file:
      json.dump(item, file, ensure_ascii=True, indent=4)

  print(f"[Success]: {len(valid_publications)} scraped and fetched!")
  print(f"\n\n////////////////////////////")
  print(f"Your estimated cost to embed all of this data using OpenAI's text-embedding-ada-002 model at $0.0004 / 1K tokens will cost {ada_v2_cost(totalTokenCount)} using {totalTokenCount} tokens.")
  print(f"////////////////////////////\n\n")
  exit(0)

86

collector/scripts/substack_utils.py

Normal file

@@ -0,0 +1,86 @@

import os, json, requests, tempfile
from requests_html import HTMLSession
from langchain.document_loaders import UnstructuredHTMLLoader

def fetch_all_publications(subdomain):
  file_path = f"./outputs/substack-logs/substack-{subdomain}.json"

  if os.path.isdir("./outputs/substack-logs") == False:
    os.makedirs("./outputs/substack-logs")

  if os.path.exists(file_path):
    with open(file_path, "r") as file:
      print(f"Returning cached data for substack {subdomain}.substack.com. If you do not wish to use stored data then delete the file for this newsletter to allow refetching.")
      return json.load(file)

  collecting = True
  offset = 0
  publications = []

  while collecting is True:
    url = f"https://{subdomain}.substack.com/api/v1/archive?sort=new&offset={offset}"
    response = requests.get(url)
    if(response.ok == False):
      print("Bad response - exiting collection")
      collecting = False
      continue

    data = response.json()

    if(len(data) ==0 ):
      collecting = False
      continue

    for publication in data:
      publications.append(publication)
    offset = len(publications)

  with open(file_path, 'w+', encoding='utf-8') as json_file:
    json.dump(publications, json_file, ensure_ascii=True, indent=2)
    print(f"{len(publications)} publications found for author {subdomain}.substack.com. Saved to substack-logs/substack-{subdomain}.json")

  return publications

def only_valid_publications(publications= []):
  valid_publications = []
  for publication in publications:
    is_paid = publication.get('audience') != 'everyone'
    if (is_paid and publication.get('should_send_free_preview') != True) or publication.get('type') != 'newsletter': continue
    valid_publications.append(publication)
  return valid_publications

def get_content(article_link):
  print(f"Fetching {article_link}")
  if(len(article_link) == 0):
    print("Invalid URL!")
    return None

  session = HTMLSession()
  req = session.get(article_link)
  if(req.ok == False):
    print("Could not reach this url!")
    return None

  req.html.render()

  full_text = None
  with tempfile.NamedTemporaryFile(mode = "w") as tmp:
    tmp.write(req.html.html)
    tmp.seek(0)
    loader = UnstructuredHTMLLoader(tmp.name)
    data = loader.load()[0]
    full_text = data.page_content
    tmp.close()
  return full_text

def append_meta(publication, text):
  meta = {
    'url': publication.get('canonical_url'),
    'thumbnail': publication.get('cover_image'),
    'title': publication.get('title'),
    'subtitle': publication.get('subtitle'),
    'description': publication.get('description'),
    'createdAt': publication.get('post_date'),
    'wordCount': publication.get('wordcount')
  }
  return "Newsletter Metadata:\n"+json.dumps(meta)+"\n\nArticle Content:\n" + text
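`fetch_all_publications` pages through the archive endpoint, using the number of posts collected so far as the next `offset` and stopping at the first empty page; `only_valid_publications` then keeps newsletters that are either free or paid with a free preview. The sketch below restates both rules without network access. `fetch_page` is a hypothetical stand-in for the `requests.get(...).json()` call, and the sketch omits the `response.ok` check.

```python
from typing import Callable, Dict, List

Publication = Dict[str, object]


def paginate(fetch_page: Callable[[int], List[Publication]]) -> List[Publication]:
    """Offset-based paging: request from the current count until a page comes back empty."""
    publications: List[Publication] = []
    while True:
        page = fetch_page(len(publications))  # offset = number collected so far
        if not page:
            return publications
        publications.extend(page)


def is_readable(publication: Publication) -> bool:
    """Free posts, or paid posts with a free preview, and only of type "newsletter"."""
    is_paid = publication.get("audience") != "everyone"
    if is_paid and publication.get("should_send_free_preview") is not True:
        return False
    return publication.get("type") == "newsletter"
```

Injecting the page fetcher keeps the termination rule testable with a dictionary of canned pages instead of live requests.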

10

collector/scripts/utils.py

Normal file

@@ -0,0 +1,10 @@

import tiktoken
encoder = tiktoken.encoding_for_model("text-embedding-ada-002")

def tokenize(fullText):
  return encoder.encode(fullText)

def ada_v2_cost(tokenCount):
  rate_per = 0.0004 / 1_000 # $0.0004 / 1K tokens
  total = tokenCount * rate_per
  return '${:,.2f}'.format(total) if total >= 0.01 else '< $0.01'
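`ada_v2_cost` multiplies the token count by $0.0004 / 1,000 = $0.0000004 per token and reports anything under one cent as `< $0.01`. For example, 1,000,000 tokens come to 1,000,000 × 0.0000004 = $0.40, while 10,000 tokens (about $0.004) print as `< $0.01`. The function is repeated below without the `tiktoken` dependency so the arithmetic can be checked on its own; the rate is the one hard-coded in this commit and may not match current OpenAI pricing.

```python
def ada_v2_cost(token_count: int) -> str:
    # Same rate as collector/scripts/utils.py: $0.0004 per 1K tokens.
    rate_per_token = 0.0004 / 1_000
    total = token_count * rate_per_token
    # Thousands separators and two decimals; sub-cent totals are reported as "< $0.01".
    return "${:,.2f}".format(total) if total >= 0.01 else "< $0.01"


for tokens in (10_000, 1_000_000, 3_000_000_000):
    print(f"{tokens:>13,} tokens -> {ada_v2_cost(tokens)}")
```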

0

collector/scripts/watch/__init__.py

Normal file

58

collector/scripts/watch/convert/as_docx.py

Normal file

@@ -0,0 +1,58 @@

import os
from langchain.document_loaders import Docx2txtLoader, UnstructuredODTLoader
from slugify import slugify
from ..utils import guid, file_creation_time, write_to_server_documents, move_source
from ...utils import tokenize

# Process all text-related documents.
def as_docx(**kwargs):
  parent_dir = kwargs.get('directory', 'hotdir')
  filename = kwargs.get('filename')
  ext = kwargs.get('ext', '.txt')
  fullpath = f"{parent_dir}/{filename}{ext}"

  loader = Docx2txtLoader(fullpath)
  data = loader.load()[0]
  content = data.page_content

  print(f"-- Working {fullpath} --")
  data = {
    'id': guid(),
    'url': "file://"+os.path.abspath(f"{parent_dir}/processed/{filename}{ext}"),
    'title': f"{filename}{ext}",
    'description': "a custom file uploaded by the user.",
    'published': file_creation_time(fullpath),
    'wordCount': len(content),
    'pageContent': content,
    'token_count_estimate': len(tokenize(content))
  }

  write_to_server_documents(data, f"{slugify(filename)}-{data.get('id')}")
  move_source(parent_dir, f"{filename}{ext}")
  print(f"[SUCCESS]: {filename}{ext} converted & ready for embedding.\n")

def as_odt(**kwargs):
  parent_dir = kwargs.get('directory', 'hotdir')
  filename = kwargs.get('filename')
  ext = kwargs.get('ext', '.txt')
  fullpath = f"{parent_dir}/{filename}{ext}"

  loader = UnstructuredODTLoader(fullpath)
  data = loader.load()[0]
  content = data.page_content

  print(f"-- Working {fullpath} --")
  data = {
    'id': guid(),
    'url': "file://"+os.path.abspath(f"{parent_dir}/processed/{filename}{ext}"),
    'title': f"{filename}{ext}",
    'description': "a custom file uploaded by the user.",
    'published': file_creation_time(fullpath),
    'wordCount': len(content),
    'pageContent': content,
    'token_count_estimate': len(tokenize(content))
  }

  write_to_server_documents(data, f"{slugify(filename)}-{data.get('id')}")
  move_source(parent_dir, f"{filename}{ext}")
  print(f"[SUCCESS]: {filename}{ext} converted & ready for embedding.\n")

32

collector/scripts/watch/convert/as_markdown.py

Normal file

@@ -0,0 +1,32 @@

import os
from langchain.document_loaders import UnstructuredMarkdownLoader
from slugify import slugify
from ..utils import guid, file_creation_time, write_to_server_documents, move_source
from ...utils import tokenize

# Process all text-related documents.
def as_markdown(**kwargs):
  parent_dir = kwargs.get('directory', 'hotdir')
  filename = kwargs.get('filename')
  ext = kwargs.get('ext', '.txt')
  fullpath = f"{parent_dir}/{filename}{ext}"

  loader = UnstructuredMarkdownLoader(fullpath)
  data = loader.load()[0]
  content = data.page_content

  print(f"-- Working {fullpath} --")
  data = {
    'id': guid(),
    'url': "file://"+os.path.abspath(f"{parent_dir}/processed/{filename}{ext}"),
    'title': f"{filename}{ext}",
    'description': "a custom file uploaded by the user.",
    'published': file_creation_time(fullpath),
    'wordCount': len(content),
    'pageContent': content,
    'token_count_estimate': len(tokenize(content))
  }

  write_to_server_documents(data, f"{slugify(filename)}-{data.get('id')}")
  move_source(parent_dir, f"{filename}{ext}")
  print(f"[SUCCESS]: {filename}{ext} converted & ready for embedding.\n")

36

collector/scripts/watch/convert/as_pdf.py

Normal file

@@ -0,0 +1,36 @@

import os
from langchain.document_loaders import PyPDFLoader
from slugify import slugify
from ..utils import guid, file_creation_time, write_to_server_documents, move_source
from ...utils import tokenize

# Process all text-related documents.
def as_pdf(**kwargs):
  parent_dir = kwargs.get('directory', 'hotdir')
  filename = kwargs.get('filename')
  ext = kwargs.get('ext', '.txt')
  fullpath = f"{parent_dir}/{filename}{ext}"

  loader = PyPDFLoader(fullpath)
  pages = loader.load_and_split()

  print(f"-- Working {fullpath} --")
  for page in pages:
    pg_num = page.metadata.get('page')
    print(f"-- Working page {pg_num} --")

    content = page.page_content
    data = {
      'id': guid(),
      'url': "file://"+os.path.abspath(f"{parent_dir}/processed/{filename}{ext}"),
      'title': f"{filename}_pg{pg_num}{ext}",
      'description': "a custom file uploaded by the user.",
      'published': file_creation_time(fullpath),
      'wordCount': len(content),
      'pageContent': content,
      'token_count_estimate': len(tokenize(content))
    }
    write_to_server_documents(data, f"{slugify(filename)}-pg{pg_num}-{data.get('id')}")

  move_source(parent_dir, f"{filename}{ext}")
  print(f"[SUCCESS]: {filename}{ext} converted & ready for embedding.\n")

28

collector/scripts/watch/convert/as_text.py

Normal file

@@ -0,0 +1,28 @@

import os
from slugify import slugify
from ..utils import guid, file_creation_time, write_to_server_documents, move_source
from ...utils import tokenize

# Process all text-related documents.
def as_text(**kwargs):
  parent_dir = kwargs.get('directory', 'hotdir')
  filename = kwargs.get('filename')
  ext = kwargs.get('ext', '.txt')
  fullpath = f"{parent_dir}/{filename}{ext}"
  content = open(fullpath).read()

  print(f"-- Working {fullpath} --")
  data = {
    'id': guid(),
    'url': "file://"+os.path.abspath(f"{parent_dir}/processed/{filename}{ext}"),
    'title': f"{filename}{ext}",
    'description': "a custom file uploaded by the user.",
    'published': file_creation_time(fullpath),
    'wordCount': len(content),
    'pageContent': content,
    'token_count_estimate': len(tokenize(content))
  }

  write_to_server_documents(data, f"{slugify(filename)}-{data.get('id')}")
  move_source(parent_dir, f"{filename}{ext}")
  print(f"[SUCCESS]: {filename}{ext} converted & ready for embedding.\n")

12

collector/scripts/watch/filetypes.py

Normal file

@@ -0,0 +1,12 @@

from .convert.as_text import as_text
from .convert.as_markdown import as_markdown
from .convert.as_pdf import as_pdf
from .convert.as_docx import as_docx, as_odt

FILETYPES = {
  '.txt': as_text,
  '.md': as_markdown,
  '.pdf': as_pdf,
  '.docx': as_docx,
  '.odt': as_odt,
}

20

collector/scripts/watch/main.py

Normal file

@@ -0,0 +1,20 @@

import os
from .filetypes import FILETYPES

RESERVED = ['__HOTDIR__.md']
def watch_for_changes(directory):
  for raw_doc in os.listdir(directory):
    if os.path.isdir(f"{directory}/{raw_doc}") or raw_doc in RESERVED: continue

    filename, fileext = os.path.splitext(raw_doc)
    if filename in ['.DS_Store'] or fileext == '': continue

    if fileext not in FILETYPES.keys():
      print(f"{fileext} not a supported file type for conversion. Please remove from hot directory.")
      continue

    FILETYPES[fileext](
      directory=directory,
      filename=filename,
      ext=fileext,
    )
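`watch_for_changes` skips directories, the reserved `__HOTDIR__.md`, and extensionless names, then looks up a converter in the `FILETYPES` table by extension and calls it with `directory`, `filename` and `ext` keyword arguments. The sketch below reproduces that dispatch with caller-supplied handlers; `route_files` is a hypothetical name, and returning the unsupported entries (instead of printing a message, as the commit does) is an assumption made so the behavior can be checked.

```python
import os
from typing import Callable, Dict, List, Tuple

RESERVED = ["__HOTDIR__.md"]


def route_files(directory: str, handlers: Dict[str, Callable[..., None]]) -> List[Tuple[str, str]]:
    """Dispatch each file in `directory` to handlers[ext]; return (filename, ext) pairs with no handler."""
    unsupported = []
    for raw_doc in sorted(os.listdir(directory)):
        if os.path.isdir(os.path.join(directory, raw_doc)) or raw_doc in RESERVED:
            continue
        filename, ext = os.path.splitext(raw_doc)
        # splitext(".DS_Store") returns (".DS_Store", ""), so the empty-extension check covers it.
        if ext == "":
            continue
        handler = handlers.get(ext)
        if handler is None:
            unsupported.append((filename, ext))
            continue
        # Same keyword arguments the converters in watch/convert/ read via kwargs.get(...).
        handler(directory=directory, filename=filename, ext=ext)
    return unsupported
```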

30

collector/scripts/watch/utils.py

Normal file

@@ -0,0 +1,30 @@

import os, json
from datetime import datetime
from uuid import uuid4

def guid():
  return str(uuid4())

def file_creation_time(path_to_file):
  try:
    if os.name == 'nt':
      return datetime.fromtimestamp(os.path.getctime(path_to_file)).strftime('%Y-%m-%d %H:%M:%S')
    else:
      stat = os.stat(path_to_file)
      return datetime.fromtimestamp(stat.st_birthtime).strftime('%Y-%m-%d %H:%M:%S')
  except AttributeError:
    return datetime.today().strftime('%Y-%m-%d %H:%M:%S')

def move_source(working_dir='hotdir', new_destination_filename= ''):
  destination = f"{working_dir}/processed"
  if os.path.exists(destination) == False:
    os.mkdir(destination)

  os.replace(f"{working_dir}/{new_destination_filename}", f"{destination}/{new_destination_filename}")
  return

def write_to_server_documents(data, filename):
  destination = f"../server/documents/custom-documents"
  if os.path.exists(destination) == False: os.makedirs(destination)
  with open(f"{destination}/{filename}.json", 'w', encoding='utf-8') as file:
    json.dump(data, file, ensure_ascii=True, indent=4)
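`file_creation_time` uses `getctime` on Windows and `st_birthtime` elsewhere; where `os.stat_result` has no `st_birthtime` (Linux, for example), the `AttributeError` branch returns the current time rather than anything about the file. A variant that prefers `st_birthtime` whenever it exists and otherwise falls back to `st_ctime` on Windows or `st_mtime` elsewhere is sketched below. The modification-time fallback is an assumed alternative, not what this commit does, and `file_timestamp` is a hypothetical name.

```python
import os
from datetime import datetime

TIME_FORMAT = "%Y-%m-%d %H:%M:%S"  # same format as file_creation_time


def file_timestamp(path: str) -> str:
    """Best-available creation time for `path`, formatted like file_creation_time."""
    stat = os.stat(path)
    # st_birthtime is only present on some platforms (e.g. macOS/BSD).
    created = getattr(stat, "st_birthtime", None)
    if created is None:
        # Windows reports creation time in st_ctime; elsewhere use modification time (assumed choice).
        created = stat.st_ctime if os.name == "nt" else stat.st_mtime
    return datetime.fromtimestamp(created).strftime(TIME_FORMAT)
```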

55

collector/scripts/youtube.py

Normal file

@@ -0,0 +1,55 @@

import os, json
from youtube_transcript_api import YouTubeTranscriptApi
from youtube_transcript_api.formatters import TextFormatter, JSONFormatter
from .utils import tokenize, ada_v2_cost
from .yt_utils import fetch_channel_video_information, get_channel_id, clean_text, append_meta, get_duration
from alive_progress import alive_it

# Example Channel URL https://www.youtube.com/channel/UCmWbhBB96ynOZuWG7LfKong
# Example Channel URL https://www.youtube.com/@mintplex

def youtube():
  channel_link = input("Paste in the URL of a YouTube channel: ")
  channel_id = get_channel_id(channel_link)

  if channel_id == None or len(channel_id) == 0:
    print("Invalid input - must be full YouTube channel URL")
    exit(1)

  channel_data = fetch_channel_video_information(channel_id)
  transaction_output_dir = f"../server/documents/youtube-{channel_data.get('channelTitle')}"

  if os.path.isdir(transaction_output_dir) == False:
    os.makedirs(transaction_output_dir)

  print(f"\nFetching transcripts for {len(channel_data.get('items'))} videos - please wait.\nStopping and restarting will not refetch known transcripts in case there is an error.\nSaving results to: {transaction_output_dir}.")
  totalTokenCount = 0
  for video in alive_it(channel_data.get('items')):
    video_file_path = transaction_output_dir + f"/video-{video.get('id')}.json"
    if os.path.exists(video_file_path) == True:
      continue

    formatter = TextFormatter()
    json_formatter = JSONFormatter()
    try:
      transcript = YouTubeTranscriptApi.get_transcript(video.get('id'))
      raw_text = clean_text(formatter.format_transcript(transcript))
      duration = get_duration(json_formatter.format_transcript(transcript))

      if(len(raw_text) > 0):
        fullText = append_meta(video, duration, raw_text)
        tokenCount = len(tokenize(fullText))
        video['pageContent'] = fullText
        video['token_count_estimate'] = tokenCount
        totalTokenCount += tokenCount
        with open(video_file_path, 'w', encoding='utf-8') as file:
          json.dump(video, file, ensure_ascii=True, indent=4)
    except: